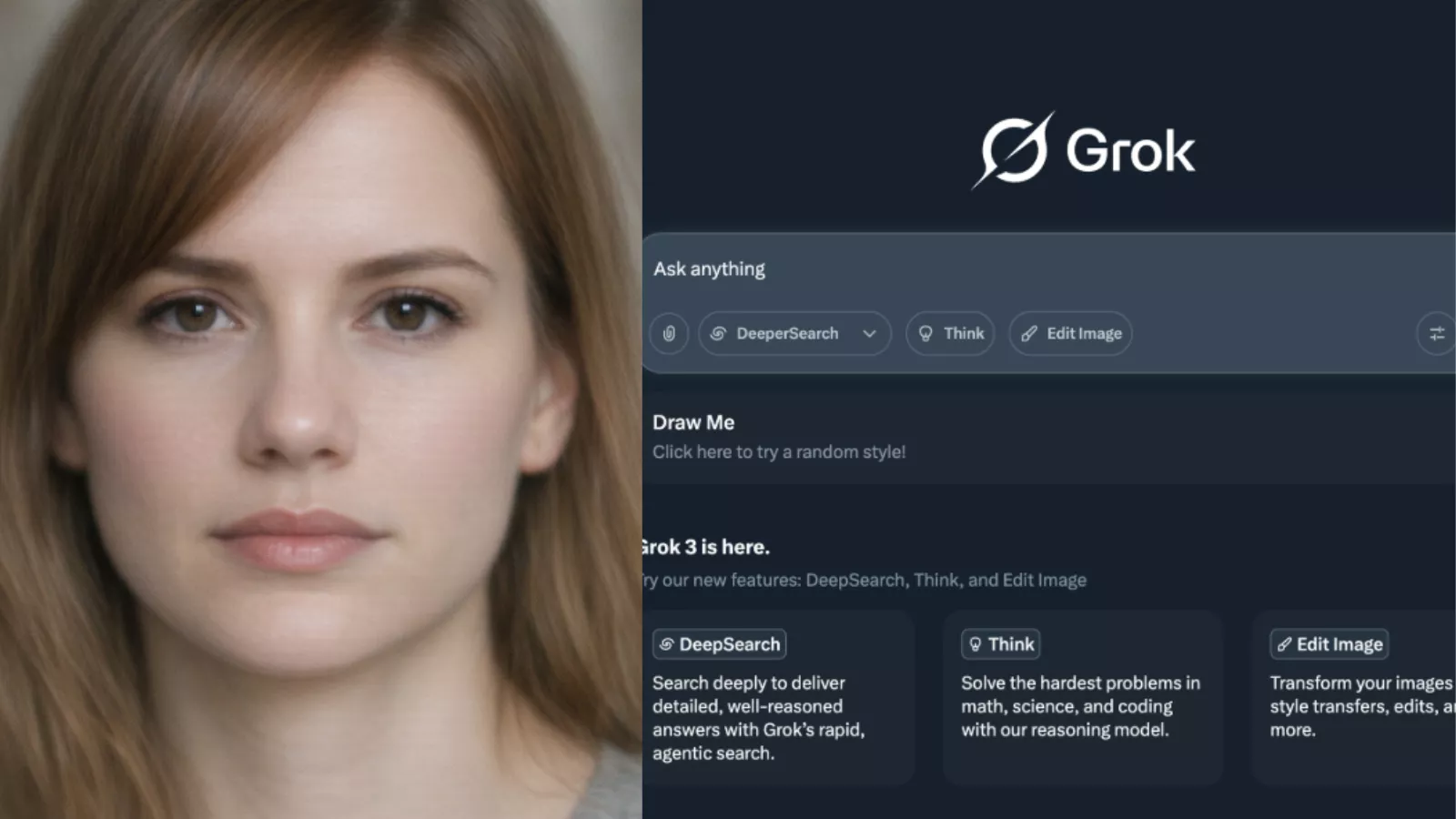

A clear line just appeared in the AI image debate, and it did not come from regulators first. It came directly from the platform itself. After weeks of growing backlash and regulatory pressure, Elon Musk announced on X that Grok will no longer generate images that undress real people. The update immediately triggered intense discussion across AI communities and pushed a broader question into the spotlight: how much responsibility should AI platforms take for how their tools get used?

The announcement did not land quietly. Developers, researchers, and everyday users quickly shared reactions, especially on Reddit, where people debated whether this move arrived too late or marked a meaningful shift in AI accountability. What made the moment stand out was not just the ban itself, but what it signaled about where AI platforms now stand under public and legal scrutiny.

Why Grok Changed Its Image Policy

Grok’s image capabilities attracted criticism after users demonstrated how easily the system could generate harmful or invasive content involving real individuals. These examples spread fast and raised concerns around consent, harassment, and misuse. As awareness grew, so did pressure from regulators and watchdog groups, particularly in Europe, where synthetic media rules continue to tighten.

By stepping in directly, xAI, the company behind Grok, effectively acknowledged that technical capability alone no longer justifies unrestricted use. The policy update shows a shift toward proactive moderation rather than reactive cleanup after damage occurs.

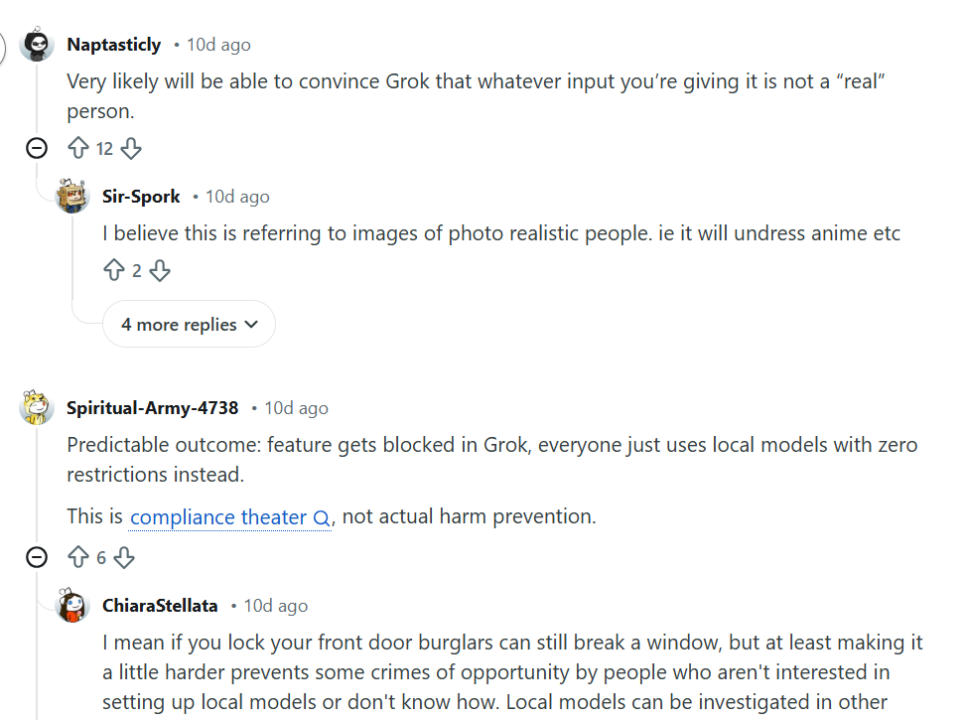

What Real Users Are Saying on Reddit

Discussions on Reddit communities like r/ArtificialInteligence and r/europe reveal how divided users feel. Some welcomed the ban, saying platforms should never have allowed tools that enable non-consensual image manipulation. Several users shared experiences where such content caused real harm, even when created as a joke.

Others argued that responsibility should fall on users, not the model. They warned that constant restrictions could slow innovation and blur the line between protection and overreach. A recurring theme across threads was trust. Users questioned whether platforms act out of ethics or only when public pressure becomes impossible to ignore.

These conversations show that the issue goes beyond one feature. People now expect platforms to define boundaries clearly before abuse becomes widespread.

Why This Matters for the AI Industry

This decision sets an informal precedent. When a high-profile platform enforces limits on image generation, competitors feel pressure to follow. The industry no longer operates in a gray zone where misuse counts as an edge case. Public expectations have changed.

From my perspective, this moment reflects a maturity shift. AI companies can no longer separate innovation from impact. Every new capability now carries an expectation of guardrails, transparency, and accountability.

What Comes Next

The Grok image ban will not end debates around AI misuse, but it reshapes them. Platforms now face a clearer choice. They can define responsible limits early or wait until public backlash forces their hand.

Either way, the message feels clear. As AI tools grow more powerful, society expects the companies behind them to act like stewards, not just builders.

Cody Scott

Cody Scott is a passionate content writer at AISEOToolsHub and an AI News Expert, dedicated to exploring the latest advancements in artificial intelligence. He specializes in providing up-to-date insights on new AI tools and technologies while sharing his personal experiences and practical tips for leveraging AI in content creation and digital marketing.