Google DeepMind has launched Gemini Robotics 1.5, which pairs AI with physical robots to create smarter, more adaptive machines that can handle complex real-world tasks.

Key Highlights:

- DeepMind releases Gemini Robotics 1.5 for intelligent robots

- New system makes robots more adaptive to real-world environments

- AI integration lets robots complete complex, multi-step tasks

- Technology bridges gap between artificial intelligence and physical robotics

Google DeepMind Introduces Gemini Robotics 1.5

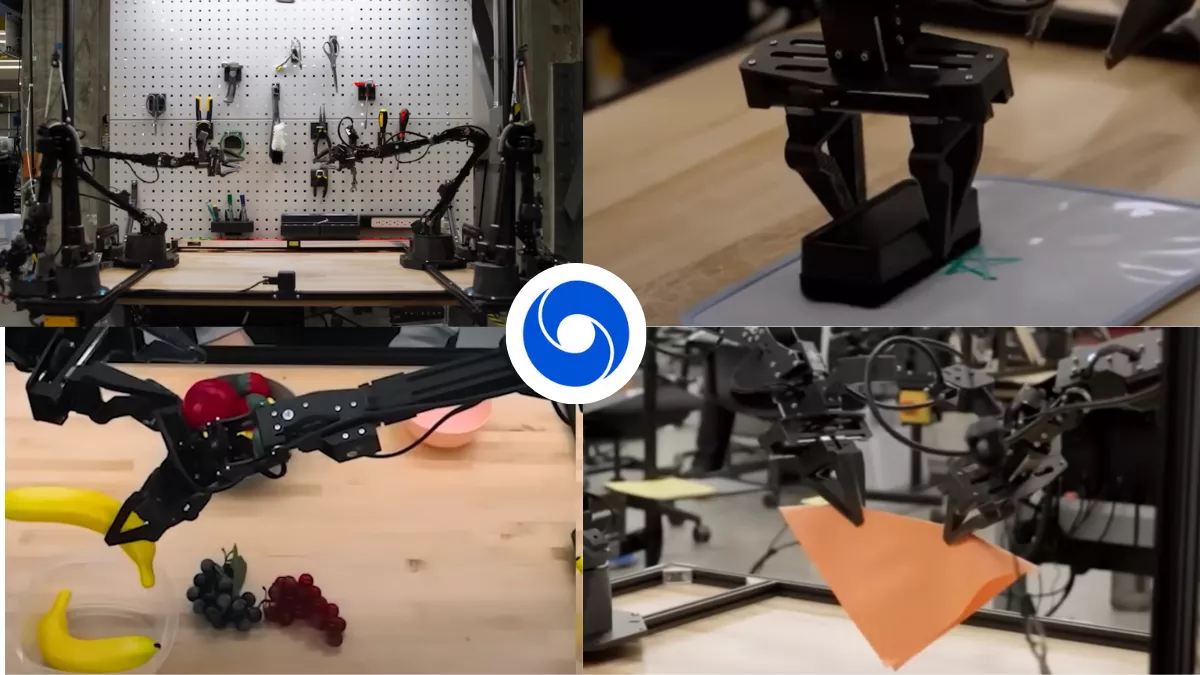

Google DeepMind has launched Gemini Robotics 1.5, a system that combines advanced AI capabilities with physical robotics. The release marks a significant step toward creating robots that can understand and adapt to unpredictable real-world situations.

Making AI Robots Smarter and More Adaptive

Traditional robots follow programmed instructions with limited flexibility. Gemini Robotics 1.5 changes this by integrating AI decision-making directly into robotic systems. The technology allows machines to assess situations, adjust their approach, and solve problems without human intervention.

This adaptability is crucial for real-world applications, where conditions change constantly. A robot running Gemini Robotics 1.5 can handle unexpected obstacles, work with unfamiliar objects, and adjust its technique based on what succeeds or fails.

Real-World Task Capabilities

DeepMind designed Gemini Robotics 1.5 for practical applications beyond research labs. The system enables robots to perform complex tasks that previously required human judgment, such as sorting irregular objects, navigating dynamic environments, and coordinating multi-step processes.

Manufacturing facilities, warehouses, and healthcare settings stand to benefit most from these capabilities. Robots equipped with this technology can assist with intricate assembly work, organize inventory in cluttered spaces, or support medical staff with patient care tasks.

How AI Helps Physical Robots Perceive and Learn

Gemini Robotics 1.5 processes visual input, spatial information, and tactile feedback together. This multimodal approach mirrors how humans combine their senses when interacting with their surroundings, giving robots a more intuitive understanding of physical space and object properties.

The AI component learns from experience, improving performance over time without requiring new programming. As robots encounter more situations, they develop better strategies for handling similar challenges in the future.

Industry Impact and Future Applications of Physical AI Robots

The launch positions Google as a competitor in the robotics market alongside other companies developing AI-driven systems. Industries facing labor shortages or hazardous working conditions may find particular value in adaptive robotic solutions.

DeepMind continues to refine Gemini Robotics 1.5, and commercial applications are expected to expand as the technology matures and proves reliable across diverse environments.