A quiet but important shift is taking shape in artificial intelligence, and it goes deeper than chatbots getting smarter or faster. The conversation heading into 2026 is increasingly focused on whether AI can actually understand how the real world works and act inside it with purpose. That change in focus is why world models and agentic systems are suddenly at the center of serious discussion.

For years, AI progress mostly meant better prediction and pattern matching. Models learned to generate text, images, and code by recognizing relationships in massive datasets. Now, researchers and builders are pushing toward something more ambitious: AI that understands space, physics, cause and effect, and the consequences of actions. That is what people mean by world models: systems designed to internalize how environments behave rather than just react to inputs.

Why World Models Are Gaining Attention

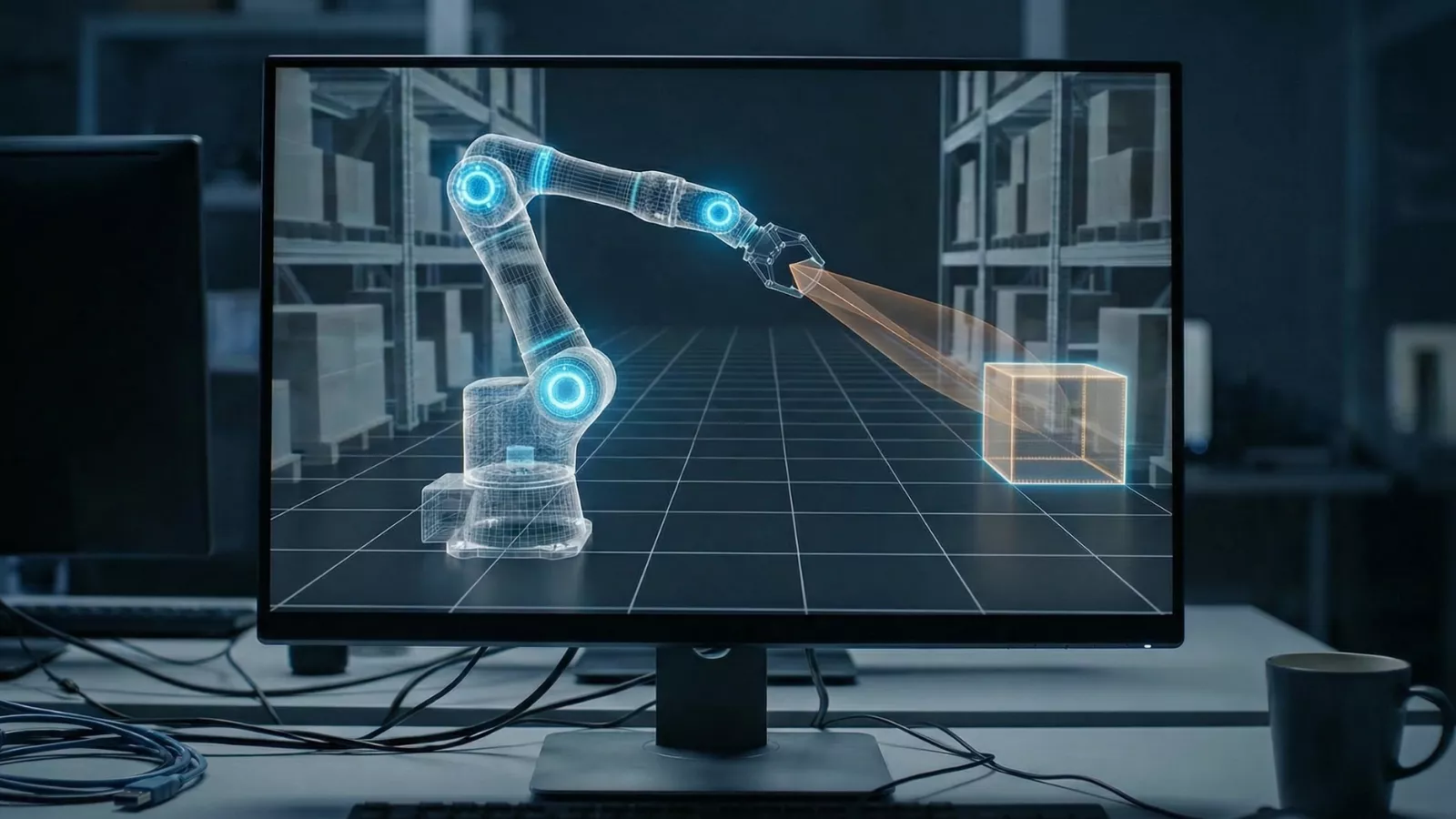

World models aim to give AI an internal representation of reality. Instead of only predicting the next word or pixel, the system builds an internal model of how objects move, how forces interact, and how actions change outcomes. This approach matters because real-world environments are unpredictable, and simple pattern recognition breaks down quickly outside controlled conditions.

As this thinking spreads, world models are being discussed not as research experiments but as foundations for practical systems. The idea is that if an AI understands how the world works, it can plan actions instead of blindly responding. That capability becomes critical when AI leaves the screen and enters physical or operational environments.
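
To make "plan actions instead of blindly responding" concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the world is a toy track with positions 0 to 10, `predict` stands in for a learned world model, and `plan` simply imagines every short action sequence and keeps the one that stays closest to the goal. Real systems learn the model from data and search far more cleverly.

```python
from itertools import product

ACTIONS = (-1, 0, 1)  # hypothetical action set: step left, stay, step right


def predict(state: int, action: int) -> int:
    """Toy stand-in for a world model: predicts the next position on a 0..10 track."""
    return max(0, min(10, state + action))


def plan(state: int, goal: int, horizon: int = 3) -> int:
    """Imagine every action sequence of length `horizon` and return the first
    action of the sequence with the lowest total distance to the goal."""
    best_action, best_cost = 0, float("inf")
    for seq in product(ACTIONS, repeat=horizon):
        s, cost = state, 0
        for a in seq:
            s = predict(s, a)       # roll the model forward; nothing happens in the real world
            cost += abs(goal - s)   # penalize every imagined step spent away from the goal
        if cost < best_cost:
            best_action, best_cost = seq[0], cost
    return best_action


print(plan(2, 8))  # first action of the best imagined sequence
```

The point of the sketch is the inner loop: the system tries actions inside its model before committing to one, which is what separates planning from reacting.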

Agentic AI Moves From Theory to Practice

At the same time, agentic AI is gaining momentum. These systems are designed to set goals, sequence tasks, and act continuously rather than waiting for constant instructions. When combined with world models, the result is AI that can reason about what to do next and why.

In practical terms, this means AI that can operate tools, navigate spaces, manage workflows, or interact with machines while adapting to change. The shift expected in 2026 is not about flashy demos but about agents that actually function in messy, real-world settings where conditions evolve.
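
The core of an agent can be sketched as a loop that observes, decides, and acts until its goal is met. The Python below is a simplified illustration, not how any particular product works: `run_agent` and the optional `disturbance` callback are hypothetical names, and the "environment" is just an integer position that something outside the agent may push around.

```python
from typing import Callable, Optional


def run_agent(
    start: int,
    goal: int,
    max_steps: int = 20,
    disturbance: Optional[Callable[[int], int]] = None,
) -> list[int]:
    """Observe-decide-act loop: step toward the goal, re-checking the state each turn."""
    state, trace = start, [start]
    for step in range(max_steps):
        if state == goal:                     # observe: is the goal already met?
            break
        action = 1 if goal > state else -1    # decide from the latest observation
        state += action                       # act
        if disturbance is not None:
            state += disturbance(step)        # the environment changes outside the agent's control
        trace.append(state)
    return trace


# The agent is knocked back two positions at step 1 and still reaches the goal.
print(run_agent(0, 3, disturbance=lambda step: -2 if step == 1 else 0))
```

Because the agent re-reads the state on every pass instead of following a fixed script, an unexpected setback costs it a few extra steps rather than breaking the run.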

Continual Learning Changes the Timeline

Another factor accelerating this shift is continual learning. Instead of freezing after training, newer systems are being designed to update their understanding over time. That ability allows world models to improve as environments change, which is essential for long-term usefulness.
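
A small sketch shows the difference between a frozen model and one that keeps learning. The `OnlineModel` class below is a hypothetical one-parameter world model: it believes each action moves the system by `gain` units, and it nudges that belief after every observation with a simple gradient step. The numbers are made up for illustration, and the learning rate is chosen to be stable for actions of size 1.

```python
class OnlineModel:
    """One-parameter model: predicted movement = gain * action."""

    def __init__(self, gain: float = 1.0, lr: float = 0.5) -> None:
        self.gain = gain
        self.lr = lr

    def predict(self, action: float) -> float:
        return self.gain * action

    def update(self, action: float, observed: float) -> None:
        """Gradient step on the squared prediction error for one observation."""
        error = observed - self.predict(action)
        self.gain += self.lr * error * action


TRUE_GAIN = 0.5  # assumed change in the environment: each action now moves half as far

frozen = OnlineModel()    # never updated after "training"
learner = OnlineModel()   # keeps updating while deployed
for _ in range(20):
    observed = TRUE_GAIN * 1.0
    learner.update(1.0, observed)

print(abs(frozen.predict(1.0) - TRUE_GAIN))   # error stays at 0.5
print(abs(learner.predict(1.0) - TRUE_GAIN))  # error shrinks toward 0
```

The frozen model keeps making the same mistake after the environment changes, while the updating one closes the gap with each new observation.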

This combination explains why predictions for 2026 feel different. The narrative is moving away from hype cycles and toward systems that learn, act, and improve while deployed.

What This Signals for the Next Phase of AI

From my perspective, this marks a deeper transition than previous AI waves. Teaching machines how the world works and how to operate within it changes what problems they can realistically solve. It opens the door to AI that participates in the world instead of merely describing it.

That is why so many people are watching this shift closely. It is not just about smarter AI, but about AI crossing from understanding information to understanding reality itself.

Cody Scott

Cody Scott is a passionate content writer at AISEOToolsHub and an AI News Expert, dedicated to exploring the latest advancements in artificial intelligence. He specializes in providing up-to-date insights on new AI tools and technologies while sharing his personal experiences and practical tips for leveraging AI in content creation and digital marketing.